
In [[computing]], '''quadruple precision''' (also commonly shortened to '''quad precision''') is a binary [[floating-point]]-based [[computer number format]] that occupies 16 bytes (128 bits) in computer memory and whose precision is about twice the 53-bit [[Double-precision floating-point format|double precision]]. | |||

This 128 bit quadruple precision is designed not only for applications requiring results in higher than double precision,<ref>{{cite web|url=http://crd.lbl.gov/~dhbailey/dhbpapers/dhb-jmb-acat08.pdf|title=High-Precision Computation and Mathematical Physics|author=David H. Bailey and Jonathan M. Borwein|date=July 6, 2009}}</ref> but also, as a primary function, to allow the computation of double precision results more reliably and accurately by minimising overflow and [[round-off error]]s in intermediate calculations and scratch variables: as [[William Kahan]], primary architect of the original IEEE-754 floating point standard noted, "For now the [[extended precision#x86 Architecture Extended Precision Format|10-byte Extended format]] is a tolerable compromise between the value of extra-precise arithmetic and the price of implementing it to run fast; very soon two more bytes of precision will become tolerable, and ultimately a 16-byte format... That kind of gradual evolution towards wider precision was already in view when [[IEEE 754|IEEE Standard 754 for Floating-Point Arithmetic]] was framed." <ref>{{cite book|first=Nicholas | last=Higham |title="Designing stable algorithms" in Accuracy and Stability of Numerical Algorithms (2 ed)| publisher=SIAM|year=2002 | pages=43 }}</ref> | |||

In [[IEEE 754-2008]] the 128-bit base-2 format is officially referred to as '''binary128'''. | |||

{{Floating-point}} | |||

== IEEE 754 quadruple-precision binary floating-point format: binary128 == | |||

The IEEE 754 standard specifies a '''binary128''' as having: | |||

* [[Sign bit]]: 1 bit | |||

* [[Exponent]] width: 15 bits | |||

* [[Significand]] [[precision (arithmetic)|precision]]: 113 bits (112 explicitly stored) | |||

<!-- "significand", with a d at the end, is a technical term, please do not confuse with "significant" --> | |||

This gives from 33 - 36 significant decimal digits precision (if a decimal string with at most 33 significant decimal is converted to IEEE 754 quadruple precision and then converted back to the same number of significant decimal, then the final string should match the original; and if an IEEE 754 quadruple precision is converted to a decimal string with at least 36 significant decimal and then converted back to quadruple, then the final number must match the original <ref name=whyieee>{{cite web|url=http://www.cs.berkeley.edu/~wkahan/ieee754status/IEEE754.PDF|title=Lecture Notes on the Status of IEEE Standard 754 for Binary Floating-Point Arithmetic| author=William Kahan |date=1 October 1987}}</ref>). | |||

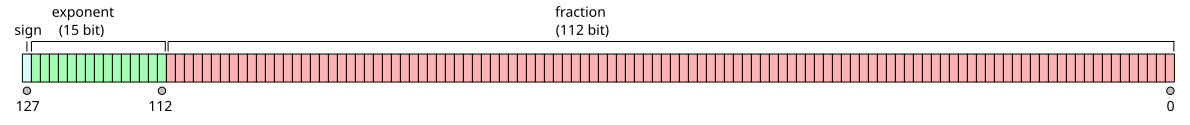

The format is written with an implicit lead bit with value 1 unless the exponent is stored with all zeros. Thus only 112 bits of the [[significand]] appear in the memory format, but the total precision is 113 bits (approximately 34 decimal digits, <math>\log_{10}(2^{113}) \approx 34.016</math>). The bits are laid out as follows: | |||

[[Image:IEEE 754 Quadruple Floating Point Format.svg]] | |||

A '''binary256''' would have 237 bits (approximately 71 decimal digits) and exponent bias 262143. | |||

=== Exponent encoding === | |||

The quadruple-precision binary floating-point exponent is encoded using an [[offset binary]] representation, with the zero offset being 16383; also known as exponent bias in the IEEE 754 standard. | |||

* E<sub>min</sub> = 0001<sub>16</sub>−3FFF<sub>16</sub> = −16382 | |||

* E<sub>max</sub> = 7FFE<sub>16</sub>−3FFF<sub>16</sub> = 16383 | |||

* [[Exponent bias]] = 3FFF<sub>16</sub> = 16383 | |||

Thus, as defined by the offset binary representation, in order to get the true exponent the offset of 16383 has to be subtracted from the stored exponent. | |||

The stored exponents 0000<sub>16</sub> and 7FFF<sub>16</sub> are interpreted specially. | |||

{|class="wikitable" style="text-align:center" | |||

! Exponent !! Significand zero !! Significand non-zero !! Equation | |||

|- | |||

| 0000<sub>16</sub> || [[0 (number)|0]], [[−0]] || [[subnormal numbers]] || <math>(-1)^{\text{signbit}} \times 2^{-16382} \times 0.\text{significandbits}_2</math> | |||

|- | |||

| 0001<sub>16</sub>, ..., 7FFE<sub>16</sub> ||colspan=2| normalized value || <math>(-1)^{\text{signbit}} \times 2^{{\text{exponentbits}_2} - 16383} \times 1.\text{significandbits}_2</math> | |||

|- | |||

| 7FFF<sub>16</sub> || ±[[infinity|∞]] || [[NaN]] (quiet, signalling) | |||

|} | |||

The minimum strictly positive (subnormal) value is 2<sup>−16493</sup> ≈ 10<sup>−4965</sup> and has a precision of only one bit, i.e. ± 2<sup>−16494</sup>. | |||

The minimum positive normal value is 2<sup>−16382</sup> ≈ 3.3621 × 10<sup>−4932</sup> and has a precision of 112 bits, i.e. ±2 <sup>−16494</sup> as well. | |||

The maximum representable value is 2<sup>16384</sup> - 2<sup>16272</sup> ≈ 1.1897 × 10<sup>4932</sup>. | |||

=== Quadruple-precision examples === | |||

These examples are given in bit ''representation'', in [[hexadecimal]], | |||

of the floating-point value. This includes the sign, (biased) exponent, and significand. | |||

3fff 0000 0000 0000 0000 0000 0000 0000 = 1 | |||

c000 0000 0000 0000 0000 0000 0000 0000 = -2 | |||

7ffe ffff ffff ffff ffff ffff ffff ffff ≈ 1.189731495357231765085759326628007 × 10<sup>4932</sup> (max quadruple precision) | |||

0000 0000 0000 0000 0000 0000 0000 0000 = 0 | |||

8000 0000 0000 0000 0000 0000 0000 0000 = -0 | |||

7fff 0000 0000 0000 0000 0000 0000 0000 = infinity | |||

ffff 0000 0000 0000 0000 0000 0000 0000 = -infinity | |||

3ffd 5555 5555 5555 5555 5555 5555 5555 ≈ 1/3 | |||

By default, 1/3 rounds down like [[double precision]], because of the odd number of bits in the significand. | |||

So the bits beyond the rounding point are <code>0101...</code> which is less than 1/2 of a [[unit in the last place]]. | |||

== Double-double arithmetic == | |||

A common software technique to implement nearly quadruple precision using ''pairs'' of [[double-precision]] values is sometimes called '''double-double arithmetic'''.<ref name=Hida>Yozo Hida, X. Li, and D. H. Bailey, [http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.4.5769 Quad-Double Arithmetic: Algorithms, Implementation, and Application], Lawrence Berkeley National Laboratory Technical Report LBNL-46996 (2000). Also Y. Hida et al., [http://web.mit.edu/tabbott/Public/quaddouble-debian/qd-2.3.4-old/docs/qd.pdf Library for double-double and quad-double arithmetic] (2007).</ref><ref name=Shewchuk>J. R. Shewchuk, [http://www.cs.cmu.edu/~quake/robust.html Adaptive Precision Floating-Point Arithmetic and Fast Robust Geometric Predicates], Discrete & Computational Geometry 18:305-363, 1997.</ref><ref name="Knuth-4.2.3-pr9">{{cite book |last=Knuth |first=D. E. |title=The Art of Computer Programming |edition=2nd |at=chapter 4.2.3. problem 9. }}</ref> Using pairs of IEEE double-precision values with 53-bit significands, double-double arithmetic can represent operations with at least<ref name=Hida/> a 2×53=106-bit significand (actually 107 bits<ref>Robert Munafo [http://mrob.com/pub/math/f161.html F107 and F161 High-Precision Floating-Point Data Types] (2011).</ref> except for some of the largest values, due to the limited exponent range), only slightly less precise than the 113-bit significand of IEEE binary128 quadruple precision. The range of a double-double remains essentially the same as the double-precision format because the exponent has still 11 bits,<ref name=Hida /> significantly lower than the 15-bit exponent of IEEE quadruple precision (a range of <math>1.8\times10^{308}</math> for double-double versus <math>1.2\times10^{4932}</math> for binary128). | |||

In particular, a double-double/quadruple-precision value ''q'' in the double-double technique is represented implicitly as a sum ''q''=''x''+''y'' of two double-precision values ''x'' and ''y'', each of which supplies half of ''q'''s significand.<ref name=Shewchuk/> That is, the pair (''x'',''y'') is stored in place of ''q'', and operations on ''q'' values (+,−,×,...) are transformed into equivalent (but more complicated) operations on the ''x'' and ''y'' values. Thus, arithmetic in this technique reduces to a sequence of double-precision operations; since double-precision arithmetic is commonly implemented in hardware, double-double arithmetic is typically substantially faster than more general [[arbitrary-precision arithmetic]] techniques.<ref name=Hida/><ref name=Shewchuk/> | |||

Note that double-double arithmetic has the following special characteristics:<ref>[http://pic.dhe.ibm.com/infocenter/aix/v7r1/index.jsp?topic=%2Fcom.ibm.aix.genprogc%2Fdoc%2Fgenprogc%2F128bit_long_double_floating-point_datatype.htm 128-Bit Long Double Floating-Point Data Type]</ref> | |||

* As the magnitude of the value decreases, the amount of extra precision also decreases. Therefore, the smallest number in the normalized range is narrower than double precision. The smallest number with full precision is 1000...0<sub>2</sub> (106 zeros) × 2<sup>−1074</sup>, or 1.000...0<sub>2</sub> (106 zeros) × 2<sup>−968</sup>. Numbers whose magnitude is smaller than 2<sup>−1021</sup> will not have additional precision compared with double precision. | |||

* The actual number of bits of precision can vary. In general, the magnitude of low-order part of the number is no greater than half ULP of the high-order part. If the low-order part is less than half ULP of the high-order part, significant bits (either all 0's or all 1's) are implied between the significant of the high-order and low-order numbers. Certain algorithms that rely on having a fixed number of bits in the significand can fail when using 128-bit long double numbers. | |||

* Because of the reason above, it is possible to represent values like 1 + 2<sup>−1074</sup>, which is the smallest representable number greater than 1. | |||

In addition to the double-double arithmetic, it is also possible to generate triple-double or quad-double arithmetic if higher precision is required without any higher precision floating-point library. They are represented as a sum of three (or four) double-precision values respectively. They can represent operations with at least 159/161 and 212/215 bits respectively. | |||

Similar technique can be used to produce a '''double-quad arithmetic''', which is represented as a sum of two quadruple-precision values. They can represent operations with at least 226 (or 227) bits.<ref>sourceware.org [http://sourceware.org/ml/libc-alpha/2012-03/msg01024.html Re: The state of glibc libm]</ref> | |||

==Implementations== | |||

Quadruple precision is almost always implemented in software by a variety of techniques (such as the double-double technique above, although that technique does not implement IEEE quadruple precision), since direct hardware support for quadruple precision is extremely rare. One can use general [[arbitrary-precision arithmetic]] libraries to obtain quadruple (or higher) precision, but specialized quadruple-precision implementations may achieve higher performance. | |||

===Computer-language support=== | |||

A separate question is the extent to which quadruple-precision types are directly incorporated into computer [[programming language]]s. | |||

Quadruple precision is specified in [[Fortran]] by the <code>real(real128)</code> (module <code>iso_fortran_env</code> from Fortran 2008 must be used, the constant <code>real128</code> is equal to 16 on most processors), or as <code>real(selected_real_kind(33, 4931))</code>, or in a non-standard way as <code>REAL*16</code>. (Quadruple-precision <code>REAL*16</code> is supported by the [[Intel Fortran Compiler]]<ref>{{cite web|title= Intel Fortran Compiler Product Brief |url=http://h21007.www2.hp.com/portal/download/files/unprot/intel/product_brief_Fortran_Linux.pdf|work=|publisher=Su|date=|accessdate=2010-01-23}}</ref> and by the [[gfortran|GNU Fortran compiler]]<ref>{{cite web|title= GCC 4.6 Release Series - Changes, New Features, and Fixes |url=http://gcc.gnu.org/gcc-4.6/changes.html|work=|publisher=|date=|accessdate=2010-02-06}}</ref> on [[x86]], [[x86-64]], and [[Itanium]] architectures, for example.) | |||

In the [[C (programming language)|C]]/[[C++]] with a few systems and compilers, quadruple precision may be specified by the [[long double]] type, but this is not required by the language (which only requires <code>long double</code> to be at least as precise as <code>double</code>), nor is it common. On x86 and x86-64, the most common C/C++ compilers implement <code>long double</code> as either 80-bit [[extended precision]] (e.g. the [[GNU C Compiler]] gcc<ref>[http://gcc.gnu.org/onlinedocs/gcc/i386-and-x86_002d64-Options.html i386 and x86-64 Options], ''Using the GNU Compiler Collection''.</ref> and the [[Intel C++ compiler]] with a <code>/Qlong‑double</code> switch<ref>[http://software.intel.com/en-us/articles/size-of-long-integer-type-on-different-architecture-and-os/ Intel Developer Site]</ref>) or simply as being synonymous with double precision (e.g. [[Microsoft Visual C++]]<ref>[http://msdn.microsoft.com/en-us/library/9cx8xs15.aspx MSDN homepage, about Visual C++ compiler]</ref>), rather than as quadruple precision. On a few other architectures, some C/C++ compilers implement <code>long double</code> as quadruple precision, e.g. 
gcc on [[PowerPC]] (as double-double<ref>[http://gcc.gnu.org/onlinedocs/gcc/RS_002f6000-and-PowerPC-Options.html RS/6000 and PowerPC Options], ''Using the GNU Compiler Collection''.</ref><ref>[http://developer.apple.com/legacy/mac/library/documentation/Performance/Conceptual/Mac_OSX_Numerics/Mac_OSX_Numerics.pdf Inside Macintosh - PowerPC Numerics]</ref><ref>[http://www.opensource.apple.com/source/gcc/gcc-5646/gcc/config/rs6000/darwin-ldouble.c 128-bit long double support routines for Darwin]</ref>) and [[SPARC]],<ref>[http://gcc.gnu.org/onlinedocs/gcc/SPARC-Options.html SPARC Options], ''Using the GNU Compiler Collection''.</ref> or the [[Sun Studio (software)|Sun Studio compilers]] on SPARC.<ref>[http://download.oracle.com/docs/cd/E19422-01/819-3693/ncg_lib.html The Math Libraries], Sun Studio 11 ''Numerical Computation Guide'' (2005).</ref> Even if <code>long double</code> is not quadruple precision, however, some C/C++ compilers provide a nonstandard quadruple-precision type as an extension. For example, gcc provides a quadruple-precision type called <code>__float128</code> for x86, x86-64 and [[Itanium]] CPUs,<ref>[http://gcc.gnu.org/onlinedocs/gcc/Floating-Types.html Additional Floating Types], ''Using the GNU Compiler Collection''</ref> and some versions of Intel's C/C++ compiler for x86 and x86-64 supply a nonstandard quadruple-precision type called <code>_Quad</code>.<ref>[http://software.intel.com/en-us/forums/showthread.php?t=56359 Intel C++ Forums] (2007).</ref> | |||

=== Hardware support === | |||

Native support of 128-bit floats is defined in [[SPARC]] V8<ref>{{cite book | |||

| title = The SPARC Architecture Manual: Version 8 | |||

| year = 1992 | |||

| publisher = SPARC International, Inc | |||

| url = http://www.sparc.com/standards/V8.pdf | |||

| accessdate = 2011-09-24 | |||

| quote = SPARC is an instruction set architecture (ISA) with 32-bit integer and 32-, 64-, and 128-bit IEEE Standard 754 floating-point as its principal data types. | |||

}}</ref> and V9<ref>{{cite book | |||

| title = The SPARC Architecture Manual: Version 9 | |||

| year = 1994 | |||

| editor = David L. Weaver, Tom Germond | |||

| publisher = SPARC International, Inc | |||

| url = http://www.sparc.org/standards/SPARCV9.pdf | |||

| accessdate = 2011-09-24 | |||

| quote = Floating-point: The architecture provides an IEEE 754-compatible floating-point instruction set, operating on a separate register file that provides 32 single-precision (32-bit), 32 double-precision (64-bit), 16 quad-precision (128-bit) registers, or a mixture thereof. | |||

}}</ref> architectures (e.g. there are 16 quad-precision registers %q0, %q4, ...), but no SPARC CPU implements quad-precision operations in hardware as of 2004.<ref>{{cite book | |||

| title = Numerical Computation Guide — Sun Studio 10 | |||

| chapter = SPARC Behavior and Implementation | |||

| year = 2004 | |||

| publisher = Sun Microsystems, Inc | |||

| url = http://download.oracle.com/docs/cd/E19059-01/stud.10/819-0499/ncg_sparc.html | |||

| accessdate = 2011-09-24 | |||

| quote = There are four situations, however, when the hardware will not successfully complete a floating-point instruction: ... The instruction is not implemented by the hardware (such as ... quad-precision instructions on any SPARC FPU). | |||

}}</ref> | |||

[[IBM_Floating_Point_Architecture#Extended-precision_128-bit|Non-IEEE extended-precision]] (128 bit of storage, 1 sign bit, 7 exponent bit, 112 fraction bit, 8 bits unused) was added to the [[IBM System/370]] series (1970s–1980s) and was available on some S/360 models in the 1960s (S/360-85,<ref>[http://dx.doi.org/10.1147/sj.71.0022 "Structural aspects of the system/360 model 85: III extensions to floating-point architecture"], Padegs, A., ''IBM Systems Journal'', Vol:7 No:1 (March 1968), pp. 22–29</ref> -195, and others by special request or simulated by OS software). IEEE quadruple precision was added to the [[S/390]] G5 in 1998,<ref>[http://domino.research.ibm.com/tchjr/journalindex.nsf/c469af92ea9eceac85256bd50048567c/6c80b870ebb2c30585256bfa0067f9e9!OpenDocument "The S/390 G5 floating-point unit", Schwarz, E. M. and Krygowsk, C. A., ''IBM Journal of Research and Development''], Vol:43 No: 5/6 (1999), p.707</ref> and is supported in hardware in subsequent [[z/Architecture]] processors.<ref>{{cite article|title=The IBM eServer z990 floating-point unit. IBM J. Res. Dev. 48; pp. 311-322|author=Gerwig, G. and Wetter, H. and Schwarz, E. M. and Haess, J. and Krygowski, C. A. and Fleischer, B. M. and Kroener, M.|date=May 2004}}</ref> | |||

Quadruple-precision (128-bit) hardware implementation should not be confused with "128-bit FPUs" that implement [[SIMD]] instructions, such as [[Streaming SIMD Extensions]] or [[AltiVec]], which refers to 128-bit [[Vector processor|vectors]] of four 32-bit single-precision or two 64-bit double-precision values that are operated on simultaneously. | |||

== See also == | |||

* [[IEEE 754-2008|IEEE Standard for Floating-Point Arithmetic (IEEE 754)]] | |||

* [[Extended precision]] (80-bit) | |||

* [[ISO/IEC 10967]], Language Independent Arithmetic | |||

* [[Primitive data type]] | |||

* [[long double]] | |||

== References == | |||

{{reflist|colwidth=30em}} | |||

== External links == | |||

* [http://crd.lbl.gov/~dhbailey/mpdist/ High-Precision Software Directory] | |||

* [http://sourceforge.net/p/qpfloat/home/Home/ QPFloat], a [[free software]] ([[GPL]]) software library for quadruple-precision arithmetic | |||

* [http://www.nongnu.org/hpalib/ HPAlib], a free software ([[LGPL]]) software library for quad-precision arithmetic | |||

* [http://gcc.gnu.org/onlinedocs/libquadmath libquadmath], the [[GNU Compiler Collection|GCC]] quad-precision math library | |||

* [http://babbage.cs.qc.cuny.edu/IEEE-754 IEEE-754 Analysis], Interactive web page for examining Binary32, Binary64, and Binary128 floating-point values | |||

[[Category:Binary arithmetic]] | |||

[[Category:Data types]] | |||

Latest revision as of 13:38, 24 August 2013

In computing, quadruple precision (also commonly shortened to quad precision) is a binary floating-point-based computer number format that occupies 16 bytes (128 bits) in computer memory and whose precision is about twice the 53-bit double precision.

This 128-bit quadruple precision is designed not only for applications requiring results in higher than double precision,[1] but also, as a primary function, to allow the computation of double-precision results more reliably and accurately by minimising overflow and round-off errors in intermediate calculations and scratch variables. As William Kahan, primary architect of the original IEEE 754 floating-point standard, noted: "For now the 10-byte Extended format is a tolerable compromise between the value of extra-precise arithmetic and the price of implementing it to run fast; very soon two more bytes of precision will become tolerable, and ultimately a 16-byte format... That kind of gradual evolution towards wider precision was already in view when IEEE Standard 754 for Floating-Point Arithmetic was framed."[2]

In IEEE 754-2008 the 128-bit base-2 format is officially referred to as binary128.

IEEE 754 quadruple-precision binary floating-point format: binary128

The IEEE 754 standard specifies a binary128 as having:

- Sign bit: 1 bit

- Exponent width: 15 bits

- Significand precision: 113 bits (112 explicitly stored)

This gives from 33 to 36 significant decimal digits of precision: if a decimal string with at most 33 significant digits is converted to IEEE 754 quadruple precision and then converted back to the same number of significant digits, the final string should match the original; and if an IEEE 754 quadruple-precision number is converted to a decimal string with at least 36 significant digits and then converted back to quadruple precision, the final number must match the original.[3]
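The 33 and 36 digit bounds can be reproduced from the significand width alone. The sketch below assumes the usual round-trip formulas for a binary format with p bits of precision, floor((p − 1)·log10 2) and ceil(1 + p·log10 2); the function names are illustrative and not taken from any library.

```python
import math

def decimal_digits_preserved(p: int) -> int:
    """Decimal digits that survive a decimal -> binary -> decimal round trip."""
    return math.floor((p - 1) * math.log10(2))

def decimal_digits_needed(p: int) -> int:
    """Decimal digits needed so that binary -> decimal -> binary is exact."""
    return math.ceil(1 + p * math.log10(2))

# binary128 has a 113-bit significand, double precision has 53 bits.
for name, p in (("binary64", 53), ("binary128", 113)):
    print(name, decimal_digits_preserved(p), decimal_digits_needed(p))
```

For comparison, the same formulas give the familiar 15 and 17 digits for double precision.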

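The format is written with an implicit leading bit with value 1 unless the exponent is stored with all zeros. Thus only 112 bits of the significand appear in the memory format, but the total precision is 113 bits (approximately 34 decimal digits, $\log_{10}(2^{113}) \approx 34.016$). The bits are laid out as follows, from most to least significant: 1 sign bit (bit 127), 15 exponent bits (bits 126 to 112), and 112 significand bits (bits 111 to 0).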

A binary256 would have a significand precision of 237 bits (approximately 71 decimal digits) and exponent bias 262143.

Exponent encoding

The quadruple-precision binary floating-point exponent is encoded using an offset binary representation, with a zero offset of 16383; this offset is known as the exponent bias in the IEEE 754 standard.

- $E_{\min} = \mathrm{0001}_{16} - \mathrm{3FFF}_{16} = -16382$

- $E_{\max} = \mathrm{7FFE}_{16} - \mathrm{3FFF}_{16} = 16383$

- Exponent bias $= \mathrm{3FFF}_{16} = 16383$

Thus, as defined by the offset binary representation, in order to get the true exponent the offset of 16383 has to be subtracted from the stored exponent.

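The stored exponents $\mathrm{0000}_{16}$ and $\mathrm{7FFF}_{16}$ are interpreted specially.

| Exponent | Significand zero | Significand non-zero | Equation |
|---|---|---|---|
| $\mathrm{0000}_{16}$ | 0, −0 | subnormal numbers | $(-1)^{\text{signbit}} \times 2^{-16382} \times 0.\text{significandbits}_2$ |
| $\mathrm{0001}_{16}$, ..., $\mathrm{7FFE}_{16}$ | normalized value | normalized value | $(-1)^{\text{signbit}} \times 2^{\text{exponentbits}_2 - 16383} \times 1.\text{significandbits}_2$ |
| $\mathrm{7FFF}_{16}$ | ±∞ | NaN (quiet, signalling) | |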

The minimum strictly positive (subnormal) value is $2^{-16494} \approx 10^{-4965}$ and has a precision of only one bit. The minimum positive normal value is $2^{-16382} \approx 3.3621 \times 10^{-4932}$ and has the full 113 bits of precision, with a spacing of $2^{-16494}$ between adjacent values, the same as in the subnormal range. The maximum representable value is $2^{16384} - 2^{16271} \approx 1.1897 \times 10^{4932}$.
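The encoding rules in this section can be exercised with a small decoder. The following is a minimal sketch, not a reference implementation: `decode_binary128` is a hypothetical helper that takes the 128-bit pattern as a Python integer and returns an exact `fractions.Fraction` for finite values. It cannot distinguish −0 from 0 and collapses every NaN to a single float NaN.

```python
from fractions import Fraction

FRAC_BITS = 112   # explicitly stored significand bits
BIAS = 16383      # exponent bias, 3FFF in hexadecimal

def decode_binary128(bits: int):
    """Decode a 128-bit pattern (as an int) following the table above."""
    sign = bits >> 127
    exponent = (bits >> FRAC_BITS) & 0x7FFF
    fraction = bits & ((1 << FRAC_BITS) - 1)

    if exponent == 0x7FFF:
        # All-ones exponent: infinity if the significand is zero, else NaN.
        if fraction == 0:
            return float("-inf") if sign else float("inf")
        return float("nan")

    if exponent == 0:
        # Zero or subnormal: no implicit leading 1, fixed exponent -16382.
        magnitude = Fraction(fraction, 1 << FRAC_BITS) * Fraction(1, 2 ** 16382)
    else:
        # Normal number: implicit leading 1, true exponent = stored - bias.
        significand = 1 + Fraction(fraction, 1 << FRAC_BITS)
        magnitude = significand * Fraction(2) ** (exponent - BIAS)

    return -magnitude if sign else magnitude

print(decode_binary128(0x3FFF << FRAC_BITS))  # stored exponent equal to the bias
print(decode_binary128(0xC000 << FRAC_BITS))  # sign bit set, true exponent 1
```

Using exact rationals rather than floats is a deliberate choice here, since most binary128 values are not representable as a Python `float`.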

Quadruple-precision examples

These examples are given in bit representation, in hexadecimal, of the floating-point value. This includes the sign, (biased) exponent, and significand.

3fff 0000 0000 0000 0000 0000 0000 0000 = 1

c000 0000 0000 0000 0000 0000 0000 0000 = −2

7ffe ffff ffff ffff ffff ffff ffff ffff ≈ $1.189731495357231765085759326628007 \times 10^{4932}$ (max quadruple precision)

0000 0000 0000 0000 0000 0000 0000 0000 = 0

8000 0000 0000 0000 0000 0000 0000 0000 = −0

7fff 0000 0000 0000 0000 0000 0000 0000 = infinity

ffff 0000 0000 0000 0000 0000 0000 0000 = −infinity

3ffd 5555 5555 5555 5555 5555 5555 5555 ≈ 1/3

By default, 1/3 rounds down, as it does in double precision, because the significand has an odd number of bits: the bits beyond the rounding point are `0101...`, which is less than 1/2 of a unit in the last place.
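The rounding of 1/3 can be checked by encoding an exact rational with round-to-nearest, ties-to-even. This is a sketch under stated assumptions: `encode_binary128` is a hypothetical helper that handles only positive values in the normal range, and it works on exact `Fraction` arithmetic rather than on any hardware format.

```python
from fractions import Fraction

FRAC_BITS = 112
BIAS = 16383

def encode_binary128(value: Fraction) -> int:
    """Round a positive rational to a binary128 bit pattern (ties to even).

    Only the normal range is handled; zero, subnormals and overflow are left out.
    """
    if value <= 0:
        raise ValueError("this sketch only handles positive values")
    # Find e with 2**e <= value < 2**(e + 1).
    e = value.numerator.bit_length() - value.denominator.bit_length()
    if Fraction(2) ** e > value:
        e -= 1
    # Scale so that the integer part holds the 113-bit significand.
    scaled = value / Fraction(2) ** e * (1 << FRAC_BITS)
    q = scaled.numerator // scaled.denominator
    remainder = scaled - q          # the bits beyond the rounding point
    half = Fraction(1, 2)
    if remainder > half or (remainder == half and q % 2 == 1):
        q += 1
    if q == 1 << (FRAC_BITS + 1):   # rounding carried into the next power of two
        q >>= 1
        e += 1
    biased = e + BIAS
    if not 1 <= biased <= 0x7FFE:
        raise ValueError("value is outside the normal range")
    return (biased << FRAC_BITS) | (q - (1 << FRAC_BITS))

bits = encode_binary128(Fraction(1, 3))
assert bits == int("3ffd" + "5" * 28, 16)   # same pattern as the 1/3 example
print(format(bits, "032x"))
```

For 1/3 the discarded remainder is exactly 1/3 of a unit in the last place, so the significand is truncated rather than incremented.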

Double-double arithmetic

A common software technique to implement nearly quadruple precision using pairs of double-precision values is sometimes called double-double arithmetic.[4][5][6] Using pairs of IEEE double-precision values with 53-bit significands, double-double arithmetic can represent operations with at least[4] a 2×53 = 106-bit significand (actually 107 bits[7] except for some of the largest values, due to the limited exponent range), only slightly less precise than the 113-bit significand of IEEE binary128 quadruple precision. The range of a double-double remains essentially the same as that of the double-precision format because the exponent still has 11 bits,[4] significantly fewer than the 15-bit exponent of IEEE quadruple precision (a range of $1.8 \times 10^{308}$ for double-double versus $1.2 \times 10^{4932}$ for binary128).

In particular, a double-double/quadruple-precision value q in the double-double technique is represented implicitly as a sum q=x+y of two double-precision values x and y, each of which supplies half of q's significand.[5] That is, the pair (x,y) is stored in place of q, and operations on q values (+,−,×,...) are transformed into equivalent (but more complicated) operations on the x and y values. Thus, arithmetic in this technique reduces to a sequence of double-precision operations; since double-precision arithmetic is commonly implemented in hardware, double-double arithmetic is typically substantially faster than more general arbitrary-precision arithmetic techniques.[4][5]
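As an illustration of how an operation on q reduces to double-precision operations on x and y, the sketch below shows an error-free addition (the two-sum construction attributed to Knuth) and a simple double-double addition built on it. It assumes that Python's `float` is an IEEE double rounded to nearest, which holds on mainstream platforms; the names `two_sum` and `dd_add` are illustrative, and production libraries use more careful variants.

```python
from fractions import Fraction

def two_sum(a: float, b: float):
    """Return (s, err) with s = fl(a + b) and a + b == s + err exactly."""
    s = a + b
    bb = s - a
    err = (a - (s - bb)) + (b - bb)
    return s, err

def dd_add(x, y):
    """Add two double-double values, each given as a (high, low) pair."""
    s, e = two_sum(x[0], y[0])   # exact sum of the high parts
    e += x[1] + y[1]             # fold in the low parts (rounded)
    return two_sum(s, e)         # renormalize into a (high, low) pair

hi, lo = dd_add((1.0, 0.0), (2.0 ** -60, 0.0))
# The pair keeps a term that a single double would have rounded away.
assert 1.0 + 2.0 ** -60 == 1.0
assert Fraction(hi) + Fraction(lo) == 1 + Fraction(1, 2 ** 60)
```

The assertions compare against exact rationals, which is a convenient way to check that no bits were lost in the pair.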

Note that double-double arithmetic has the following special characteristics:[8]

- As the magnitude of the value decreases, the amount of extra precision also decreases. Therefore, the range in which values carry full precision is narrower than the range of double precision. The smallest number with full precision is $1000...0_2$ (106 zeros) $\times 2^{-1074}$, or $1.000...0_2$ (106 zeros) $\times 2^{-968}$. Numbers whose magnitude is smaller than $2^{-1021}$ will not have additional precision compared with double precision.

- The actual number of bits of precision can vary. In general, the magnitude of the low-order part of the number is no greater than half an ULP of the high-order part. If the low-order part is less than half an ULP of the high-order part, significant bits (either all 0s or all 1s) are implied between the significands of the high-order and low-order numbers. Certain algorithms that rely on having a fixed number of bits in the significand can fail when using 128-bit long double numbers.

- For the reason above, it is possible to represent values like $1 + 2^{-1074}$, which is the smallest representable number greater than 1.
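The last point can be made concrete with a pair whose low part is the smallest subnormal double. This is a small sketch, again assuming that Python's `float` is an IEEE double; the pair is written out by hand rather than produced by a double-double library.

```python
import math
from fractions import Fraction

high = 1.0
low = math.ldexp(1.0, -1074)   # smallest positive subnormal double

# A single double cannot hold the sum: the low part is rounded away.
assert high + low == 1.0

# As an unevaluated pair the value is exact, with over a thousand implied zero bits
# between the two significands.
exact = Fraction(high) + Fraction(low)
assert exact == 1 + Fraction(1, 2 ** 1074)
print(low)
```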

In addition to the double-double arithmetic, it is also possible to generate triple-double or quad-double arithmetic if higher precision is required without any higher precision floating-point library. They are represented as a sum of three (or four) double-precision values respectively. They can represent operations with at least 159/161 and 212/215 bits respectively.

A similar technique can be used to produce double-quad arithmetic, in which a value is represented as a sum of two quadruple-precision values. It can represent operations with at least 226 (or 227) bits.[9]

Implementations

Quadruple precision is almost always implemented in software by a variety of techniques (such as the double-double technique above, although that technique does not implement IEEE quadruple precision), since direct hardware support for quadruple precision is extremely rare. One can use general arbitrary-precision arithmetic libraries to obtain quadruple (or higher) precision, but specialized quadruple-precision implementations may achieve higher performance.

Computer-language support

A separate question is the extent to which quadruple-precision types are directly incorporated into computer programming languages.

Quadruple precision is specified in Fortran by `real(real128)` (the module `iso_fortran_env` from Fortran 2008 must be used; the constant `real128` is equal to 16 on most processors), or as `real(selected_real_kind(33, 4931))`, or in a non-standard way as `REAL*16`. (Quadruple-precision `REAL*16` is supported by the Intel Fortran Compiler[10] and by the GNU Fortran compiler[11] on x86, x86-64, and Itanium architectures, for example.)

In C/C++, with a few systems and compilers, quadruple precision may be specified by the `long double` type, but this is not required by the language (which only requires `long double` to be at least as precise as `double`), nor is it common. On x86 and x86-64, the most common C/C++ compilers implement `long double` as either 80-bit extended precision (e.g. the GNU C Compiler gcc[12] and the Intel C++ compiler with a `/Qlong-double` switch[13]) or simply as being synonymous with double precision (e.g. Microsoft Visual C++[14]), rather than as quadruple precision. On a few other architectures, some C/C++ compilers implement `long double` as quadruple precision, e.g. gcc on PowerPC (as double-double[15][16][17]) and SPARC,[18] or the Sun Studio compilers on SPARC.[19] Even if `long double` is not quadruple precision, however, some C/C++ compilers provide a nonstandard quadruple-precision type as an extension. For example, gcc provides a quadruple-precision type called `__float128` for x86, x86-64 and Itanium CPUs,[20] and some versions of Intel's C/C++ compiler for x86 and x86-64 supply a nonstandard quadruple-precision type called `_Quad`.[21]
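Because the meaning of `long double` varies by platform, it can be useful to inspect what the local C ABI provides. The sketch below uses Python's standard `ctypes` module to report storage sizes only. A 16-byte `long double` is not evidence of binary128, since an 80-bit extended value or a double-double pair can occupy the same amount of storage.

```python
import ctypes
import sys

# Storage sizes, in bytes, of the C types as seen through ctypes on this platform.
double_bytes = ctypes.sizeof(ctypes.c_double)
long_double_bytes = ctypes.sizeof(ctypes.c_longdouble)

print(f"double:      {double_bytes} bytes, {sys.float_info.mant_dig}-bit significand")
print(f"long double: {long_double_bytes} bytes (format not determined by size alone)")
```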

Hardware support

Native support of 128-bit floats is defined in SPARC V8[22] and V9[23] architectures (e.g. there are 16 quad-precision registers %q0, %q4, ...), but no SPARC CPU implements quad-precision operations in hardware as of 2004.[24]

Non-IEEE extended precision (128 bits of storage: 1 sign bit, 7 exponent bits, 112 fraction bits, 8 bits unused) was added to the IBM System/370 series (1970s–1980s) and was available on some S/360 models in the 1960s (S/360-85,[25] -195, and others by special request or simulated by OS software). IEEE quadruple precision was added to the S/390 G5 in 1998,[26] and is supported in hardware in subsequent z/Architecture processors.[27]

Quadruple-precision (128-bit) hardware implementation should not be confused with "128-bit FPUs" that implement SIMD instructions, such as Streaming SIMD Extensions or AltiVec, which refer to 128-bit vectors of four 32-bit single-precision or two 64-bit double-precision values that are operated on simultaneously.

See also

- IEEE Standard for Floating-Point Arithmetic (IEEE 754)

- Extended precision (80-bit)

- ISO/IEC 10967, Language Independent Arithmetic

- Primitive data type

- long double

References

1. David H. Bailey and Jonathan M. Borwein, "High-Precision Computation and Mathematical Physics" (July 6, 2009). http://crd.lbl.gov/~dhbailey/dhbpapers/dhb-jmb-acat08.pdf
2. Nicholas Higham, "Designing stable algorithms", in Accuracy and Stability of Numerical Algorithms (2nd ed.), SIAM, 2002, p. 43.
3. William Kahan, "Lecture Notes on the Status of IEEE Standard 754 for Binary Floating-Point Arithmetic" (1 October 1987). http://www.cs.berkeley.edu/~wkahan/ieee754status/IEEE754.PDF
4. Yozo Hida, X. Li, and D. H. Bailey, Quad-Double Arithmetic: Algorithms, Implementation, and Application, Lawrence Berkeley National Laboratory Technical Report LBNL-46996 (2000). http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.4.5769 Also Y. Hida et al., Library for double-double and quad-double arithmetic (2007). http://web.mit.edu/tabbott/Public/quaddouble-debian/qd-2.3.4-old/docs/qd.pdf
5. J. R. Shewchuk, Adaptive Precision Floating-Point Arithmetic and Fast Robust Geometric Predicates, Discrete & Computational Geometry 18:305-363, 1997. http://www.cs.cmu.edu/~quake/robust.html
6. D. E. Knuth, The Art of Computer Programming (2nd ed.), chapter 4.2.3, problem 9.
7. Robert Munafo, F107 and F161 High-Precision Floating-Point Data Types (2011). http://mrob.com/pub/math/f161.html
8. 128-Bit Long Double Floating-Point Data Type. http://pic.dhe.ibm.com/infocenter/aix/v7r1/index.jsp?topic=%2Fcom.ibm.aix.genprogc%2Fdoc%2Fgenprogc%2F128bit_long_double_floating-point_datatype.htm
9. sourceware.org, Re: The state of glibc libm. http://sourceware.org/ml/libc-alpha/2012-03/msg01024.html
10. Intel Fortran Compiler Product Brief. http://h21007.www2.hp.com/portal/download/files/unprot/intel/product_brief_Fortran_Linux.pdf Retrieved 2010-01-23.
11. GCC 4.6 Release Series - Changes, New Features, and Fixes. http://gcc.gnu.org/gcc-4.6/changes.html Retrieved 2010-02-06.
12. i386 and x86-64 Options, Using the GNU Compiler Collection. http://gcc.gnu.org/onlinedocs/gcc/i386-and-x86_002d64-Options.html
13. Intel Developer Site. http://software.intel.com/en-us/articles/size-of-long-integer-type-on-different-architecture-and-os/
14. MSDN homepage, about Visual C++ compiler. http://msdn.microsoft.com/en-us/library/9cx8xs15.aspx
15. RS/6000 and PowerPC Options, Using the GNU Compiler Collection. http://gcc.gnu.org/onlinedocs/gcc/RS_002f6000-and-PowerPC-Options.html
16. Inside Macintosh - PowerPC Numerics. http://developer.apple.com/legacy/mac/library/documentation/Performance/Conceptual/Mac_OSX_Numerics/Mac_OSX_Numerics.pdf
17. 128-bit long double support routines for Darwin. http://www.opensource.apple.com/source/gcc/gcc-5646/gcc/config/rs6000/darwin-ldouble.c
18. SPARC Options, Using the GNU Compiler Collection. http://gcc.gnu.org/onlinedocs/gcc/SPARC-Options.html
19. The Math Libraries, Sun Studio 11 Numerical Computation Guide (2005). http://download.oracle.com/docs/cd/E19422-01/819-3693/ncg_lib.html
20. Additional Floating Types, Using the GNU Compiler Collection. http://gcc.gnu.org/onlinedocs/gcc/Floating-Types.html
21. Intel C++ Forums (2007). http://software.intel.com/en-us/forums/showthread.php?t=56359
22. The SPARC Architecture Manual: Version 8. SPARC International, Inc, 1992. http://www.sparc.com/standards/V8.pdf Retrieved 2011-09-24. "SPARC is an instruction set architecture (ISA) with 32-bit integer and 32-, 64-, and 128-bit IEEE Standard 754 floating-point as its principal data types."
23. David L. Weaver, Tom Germond (eds.), The SPARC Architecture Manual: Version 9. SPARC International, Inc, 1994. http://www.sparc.org/standards/SPARCV9.pdf Retrieved 2011-09-24. "Floating-point: The architecture provides an IEEE 754-compatible floating-point instruction set, operating on a separate register file that provides 32 single-precision (32-bit), 32 double-precision (64-bit), 16 quad-precision (128-bit) registers, or a mixture thereof."
24. "SPARC Behavior and Implementation", Numerical Computation Guide, Sun Studio 10. Sun Microsystems, Inc, 2004. http://download.oracle.com/docs/cd/E19059-01/stud.10/819-0499/ncg_sparc.html Retrieved 2011-09-24. "There are four situations, however, when the hardware will not successfully complete a floating-point instruction: ... The instruction is not implemented by the hardware (such as ... quad-precision instructions on any SPARC FPU)."
25. A. Padegs, "Structural aspects of the system/360 model 85: III extensions to floating-point architecture", IBM Systems Journal, Vol. 7, No. 1 (March 1968), pp. 22–29. http://dx.doi.org/10.1147/sj.71.0022
26. E. M. Schwarz and C. A. Krygowski, "The S/390 G5 floating-point unit", IBM Journal of Research and Development, Vol. 43, No. 5/6 (1999), p. 707. http://domino.research.ibm.com/tchjr/journalindex.nsf/c469af92ea9eceac85256bd50048567c/6c80b870ebb2c30585256bfa0067f9e9!OpenDocument
27. G. Gerwig, H. Wetter, E. M. Schwarz, J. Haess, C. A. Krygowski, B. M. Fleischer, and M. Kroener, "The IBM eServer z990 floating-point unit", IBM J. Res. Dev. 48, pp. 311-322 (May 2004).

External links

- High-Precision Software Directory: http://crd.lbl.gov/~dhbailey/mpdist/

- QPFloat, a free software (GPL) software library for quadruple-precision arithmetic: http://sourceforge.net/p/qpfloat/home/Home/

- HPAlib, a free software (LGPL) software library for quad-precision arithmetic: http://www.nongnu.org/hpalib/

- libquadmath, the GCC quad-precision math library: http://gcc.gnu.org/onlinedocs/libquadmath

- IEEE-754 Analysis, interactive web page for examining Binary32, Binary64, and Binary128 floating-point values: http://babbage.cs.qc.cuny.edu/IEEE-754